Day 5: The Fuzzy Edges of Character Encoding

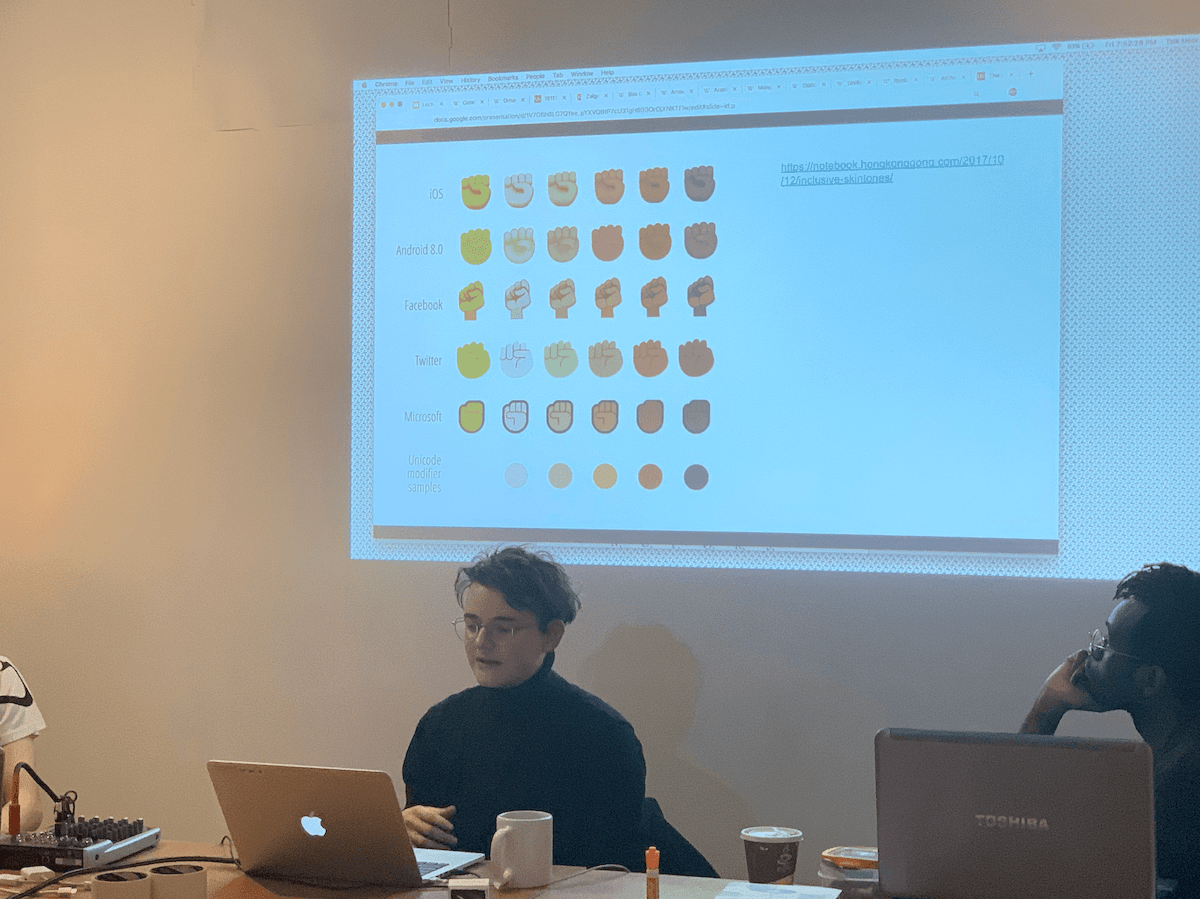

taught by Everest Pipkin

Syllabus

Day 5 of Code Societies was an evening full of the history, politics, and computational basics of text-based character encoding taught by Everest Pipkin.

In previous technology-based classes and workshops I have attended, people have neglected to talk about character encoding. In coding classes, I was told to just make sure to never forget to include <meta charset=”UTF-8”> No one has ever explained why until this class.

How does something that it is so relevant to the inner workings of computers and the ways in which we communicate with each other all around the world get glossed over or worse not even mentioned?

My fave bits of history shared by Everest:

Computers were human for a long time.

Computers weren’t always dominated by men! Early computers performed calculations and mostly women were contracted to do that computational work.

The term “computer bug” literally came from earlier days when bugs would get stuck in the machines.

The first message sent on the telegraph in morse code was “what hath god wrought.”

The census used to take about seven years total to process and was done via Jacquard loom punch cards, which is pretty wild considering the census helps determine the number of seats your state occupies in the U.S. House of Representative and federal funding for infrastructure and public services. Imagine how delayed they were then getting people the things they needed.

Now for one of the most important things to note - everything on our computers has a set of numbers associated with it! These words you are reading on the screens are all connected to numbers! Even the photos in this blog are connected to numbers.

Everest then went over types of encoding languages that were created as communication forms, things such as drum languages, smoke signals, tap codes, and morse code. But once computers and people around the world needed to communicate more with each other, there was a push to make things more standardized - otherwise, all our messages would be encoded in a way that might make them hard to or impossible to understand if sent to a computer that had a different language system.

UTF-8

In 1868, ASCII, a system of 128 characters was created, but that was not enough to represent different languages, so this system was expanded during the Unicode Consortium who created UTF-8!

With the standardization of languages being a goal, there are many questions on the effects of digitization. Our class brought up questions of what it means to, for instance, digitize indigenous languages. I am curious about who gets to make the decisions around digitization and who gets to proclaim the supposed benefits to doing so. Also, in what ways can characters be mapped incorrectly in other languages or in what ways does this system not accommodate other languages? The risky part of this system is that there is a definite capacity that cannot be crossed so how does this capacity and limitation affect new languages that others might want to digitize? These are questions on my mind and I am curious about how things will change over time as language and needs adapt.

Glitch Art

Character encoding encompasses more than the “written” text or what we read on screens. Character encoding also informs other mediums and formats such as images, sound, and video. All of these formats can have their encoding manipulated in order to create glitches.

Every file on our computer can be converted to plain text readable material. This is a form of data bending, which essentially is the act of opening a file in a program that was designed to open a different kind of file. Data bending is something I would personally like to experiment with more and see the potentiality of effects that can be created on all kinds of file formats.

One way to do this is to open up a file in text edit or a hex editor like hex fiend. Doing so allows you to manipulate its content at a code level.

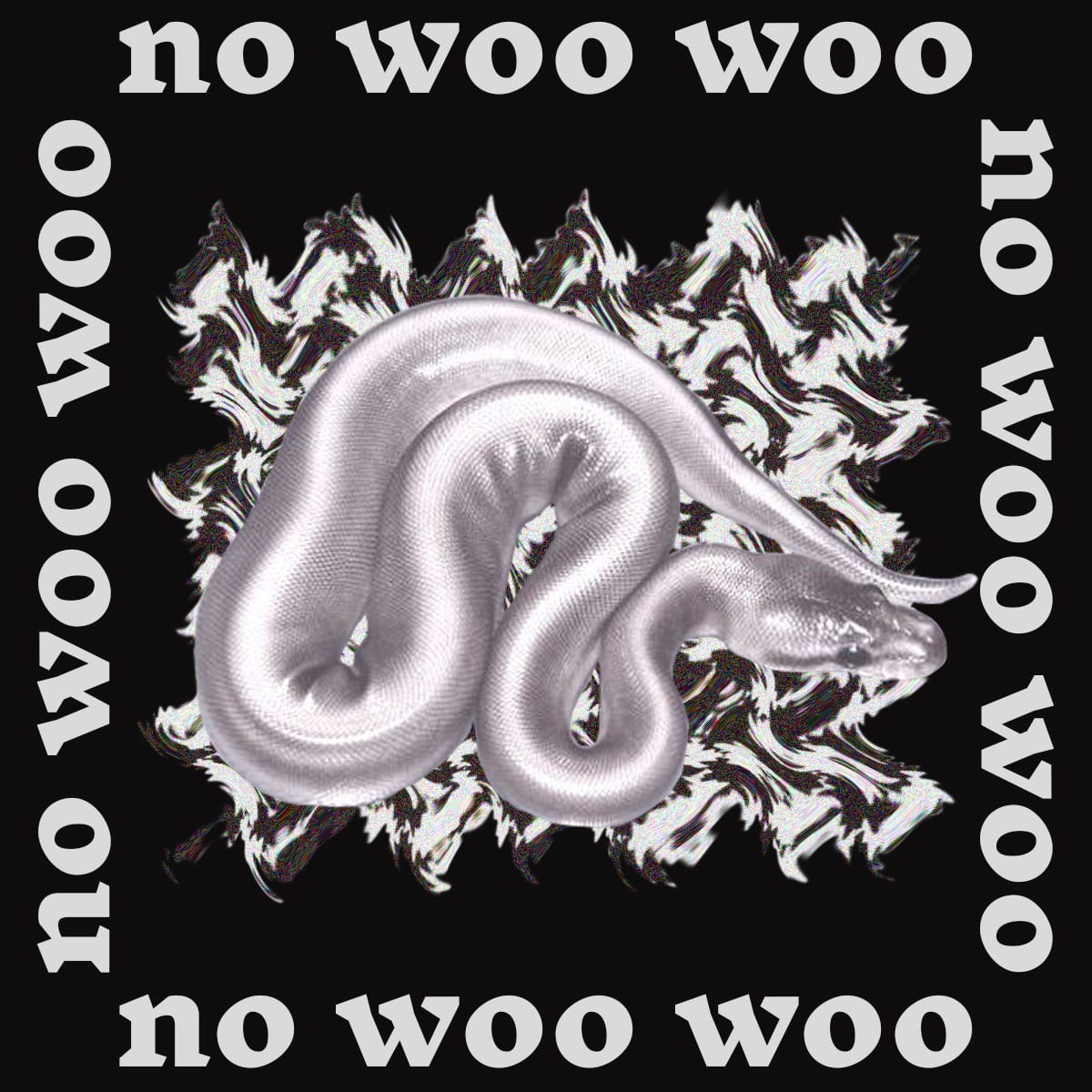

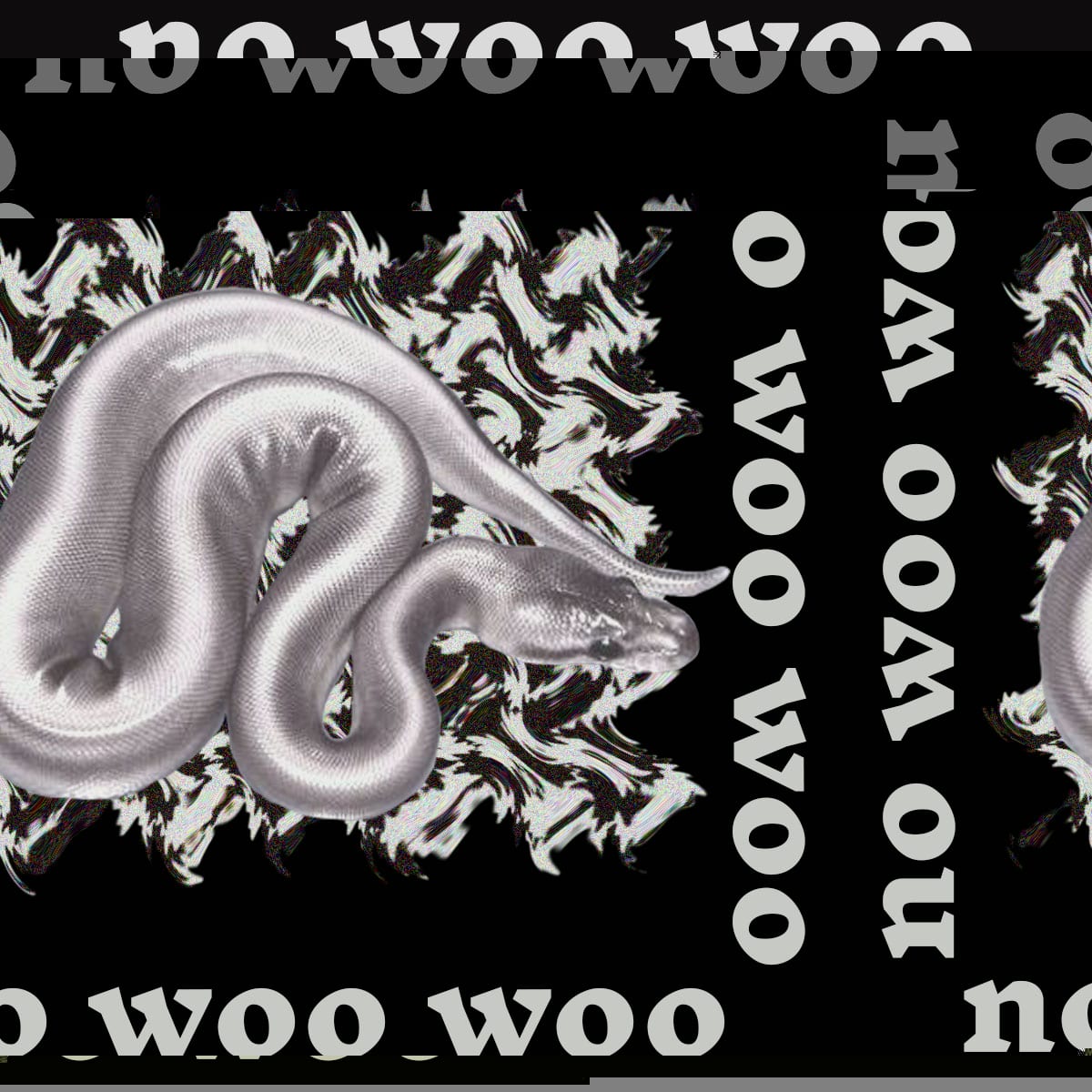

Right before the class, I made a little graphic image to advertise a bi-weekly radio show I had so I input that into hex fiend and manipulated by deleting/erasing in three different areas to see various types of results. We were instructed to not alter the very beginnings or very ends of the text files, as they might have key information that lets our computer know what kind of file we are working with. The results of this process were pretty awesome! In the past, as a kid, I was interested in glitch art but had relied on using a different phone or computer apps to accomplish the results from pre-set “glitch filters” that were created and now I finally know how to create my own effects.

Initial Image

First Glitch

Second Glitch

Third Glitch

At the moment, I am manipulating things quite randomly and so there is also a huge element of surprise every time I save the file and open to preview it. Sometimes you manipulate the file too much and it becomes extremely corrupted and unable to open.

This class in many ways feels like exploring the DNA of a complex machine that I use every day. Understanding the inner-workings not only makes me appreciate its communicative affordances but also helps me have more control over how to use these features to create interesting effects outside of pre-designed programs.

Written by Cy X